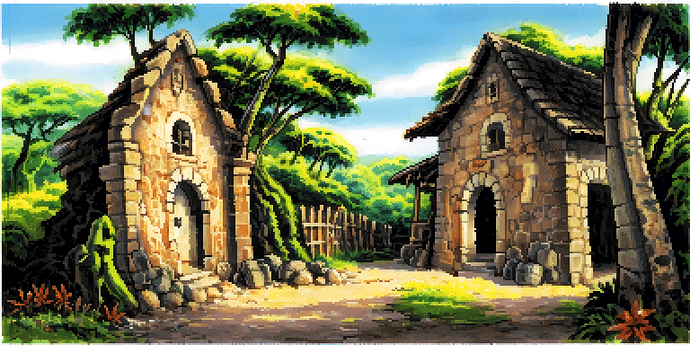

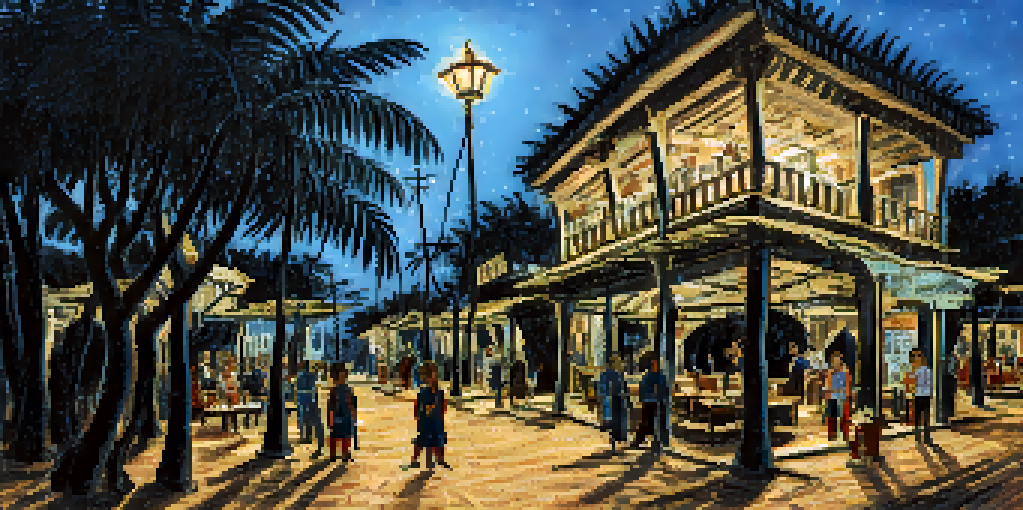

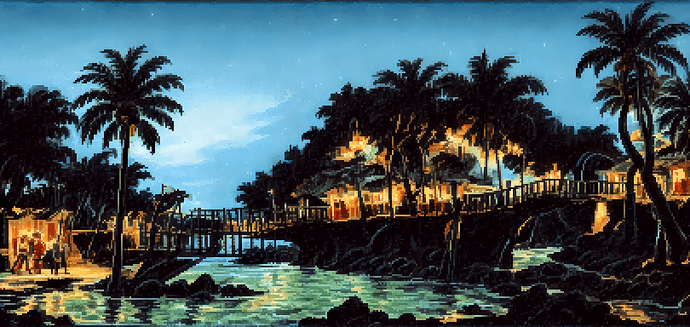

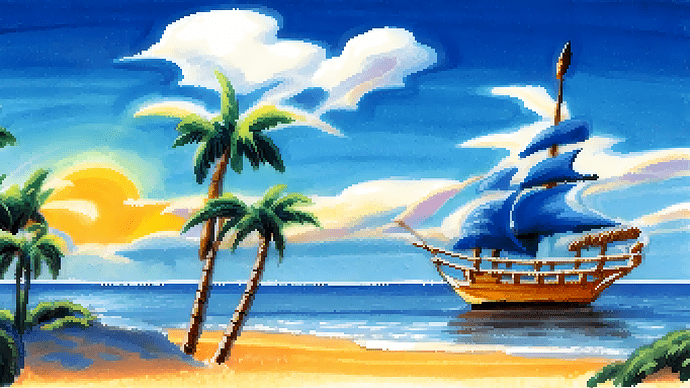

These are ridiculously beautiful. AI comes through for us again!

Though I wonder what happens when these are made smaller for something like AGS, 320x240, etc.

Which AI are you using for pixelization?

Stable Diffusion plugin: AUTOMATIC1111/stable-diffusion-webui-pixelization (GitHub)

Is it possible to generate the image at a small size, so we don't need to scale it down later?

I'm using Leonardo to generate. In principle we could set Leonardo to output at a very low resolution, but I doubt it would be sharp enough. I'll try later…
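For comparison with the AI plugin, the plain non-AI way to get a 320x240 version is to average each block of source pixels into one output pixel, and, if you want to view it large again, blow each pixel back up with nearest-neighbour scaling so the edges stay hard. This is a minimal sketch, not what the pixelization plugin does internally; it assumes images are lists of rows of (r, g, b) tuples, that the source size is an exact multiple of the target size, and the function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def downscale_box(img: Image, out_w: int, out_h: int) -> Image:
    """Shrink img to out_w x out_h by averaging each block of source pixels.

    Assumption: the source width/height are integer multiples of the target.
    """
    in_h, in_w = len(img), len(img[0])
    bx, by = in_w // out_w, in_h // out_h
    if bx * out_w != in_w or by * out_h != in_h:
        raise ValueError("source size must be a multiple of the target size")
    out: Image = []
    for oy in range(out_h):
        row: List[Pixel] = []
        for ox in range(out_w):
            # Collect every source pixel that falls into this output pixel.
            block = [img[oy * by + dy][ox * bx + dx]
                     for dy in range(by) for dx in range(bx)]
            n = len(block)
            # Average each channel separately and round back to an integer.
            row.append(tuple(round(sum(p[c] for p in block) / n) for c in range(3)))
        out.append(row)
    return out


def upscale_nearest(img: Image, factor: int) -> Image:
    """Blow each pixel up into a factor x factor square (hard pixel edges)."""
    return [[p for p in row for _ in range(factor)]
            for row in img for _ in range(factor)]
```

For example, a 1280x960 render becomes 320x240 with `downscale_box(img, 320, 240)`, and `upscale_nearest(small, 4)` brings it back to 1280x960 with chunky pixels. Plain averaging tends to look muddier than the AI pixelization, which is one reason the plugin results stand out.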

Wow, with results like that you could simply make the graphics style a toggle.

higher res:

The last ones above are kind of depressing, because they seem even better than the original images they were trained on…


Generated from pixel-art pictures of Scabb Island (it produced fuzzy images, which I then re-pixelized, mostly to improve the contrast):

This one is really amazing. What a great setting. Higher-res version (the lower-res one is above):

I got PowerQuest working: I just had to install Unity (which installs the Unity Hub; from there you install the 2020 version of Unity, which works with PowerQuest) and then import PowerQuest.

Now just got to learn how it works… see you in a year!

It's very interesting that pixelation improves the colors as well, in a way I couldn't reproduce just by adjusting the curves (or brightness/contrast).


I guess it trained on VGA.

As impressive as some of those results are, the current approach to AI and its output still remind me of monkeys with typewriters (or monkeys with scissors and glue, in the case of images). Sure, they might have gained access to a dictionary now, but there's neither understanding nor purpose in those creations.

Looking at all those images, I guess some might make excellent backdrops for an adventure game scene, if you ignore details like a lamp on top of a tree trunk or a tree growing out of a boat. A few might even work as in-between places, screens that tie different locations together without much bearing on the plot or puzzles. But getting a scene that's designed to facilitate specific gameplay, from the placement of interactive objects to the spaces for characters to navigate the room … I don't think you can get the monkeys to paint that just yet.


Still, impressive! And I guess you could attempt to piece workable rooms together from multiple images, if their style is consistent enough. And I hear the monkeys are really good with their scissors, so separating out objects from images shouldn’t be such a big deal these days.

At the moment though I feel like we’re in the “let’s see how close to the classic background styles we can get” phase and just doing general scenes.

I think it’ll become more streamlined/specific once someone has a game idea and is like, “ok I need a town in the style of the Wild West, and in this town one scene will be the entrance to a bar, and then there will be another scene that is the inside of the bar with a door at the back, and through that door is another scene showing the back of the building and there is a wooden shack there”…

The logic of the story/settings will link them together and make more sense and you can ask for it to include some specific objects in a room.

I think the closest to that so far were Seguso’s backgrounds of a cemetery and then some caves and tombs and stuff in the tombs, like I can see those all being a section of a game.

You can also give it a sketch (plus a text description) and it will try to remake it in the style you trained it on. In this case, that's Monkey 2 hi-res images (the only few I found).

sketch I gave:

output:

pixelized:

Do any of you have ScummVM savegames for Monkey 2, Part 2? Then I could train it on more daytime pictures of Monkey 2.


OK, but that sketch was of decent quality. What if I make a worse one myself?


OK, this is scary: I very quickly drew a sketch with flat colors. I did the same, this time activating ControlNet (this means it respects the lines I gave but invents the colors).

output:

pixel:

another output:

pixelized:

one more:

Without ControlNet, it doesn't invent colors:

I will update this post… come back later.


I don’t know what I am doing anymore, but these are nice:

Right now the main problem for me is that this thing is not controllable. In my sketch, I either need to specify the colors perfectly, or, if I let the AI decide the colors, they can't be controlled, so I can't impose the same palette on several pictures.
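One workaround outside the AI itself is to post-process every generated background onto a single shared palette. Below is a minimal sketch in plain Python, assuming images are lists of rows of (r, g, b) tuples loaded with whatever image library you use; the function names are hypothetical. It takes the k most frequent colors of one reference picture as the palette and snaps every pixel of other pictures to the nearest palette color by plain RGB distance.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def palette_from(img: Image, k: int) -> List[Pixel]:
    """Use the k most frequent colors of a reference image as the shared palette."""
    counts = Counter(p for row in img for p in row)
    return [color for color, _ in counts.most_common(k)]


def nearest_color(p: Pixel, palette: Sequence[Pixel]) -> Pixel:
    """Pick the palette entry with the smallest squared RGB distance to p."""
    return min(palette, key=lambda c: sum((a - b) ** 2 for a, b in zip(p, c)))


def apply_palette(img: Image, palette: Sequence[Pixel]) -> Image:
    """Remap every pixel of img onto the shared palette."""
    return [[nearest_color(p, palette) for p in row] for row in img]
```

Running `apply_palette` with the same palette on every room forces them into one color set, at the cost of some banding. Picking the most frequent colors is the crudest choice; median-cut or k-means quantizers and a perceptual color space usually give better palettes.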


Given the art generation is so hard to control, it is clear we need to first pick the backgrounds, and then build a story around them…

We can try to add specific items to the backgrounds by doing some cut and paste.

Would that work? It could be difficult to build a (thrilling) story around the art. Plus: You still have to tell the AI what you would like to see on the graphics.


This seems the most promising approach: If you have a story, draw the backgrounds you need and feed them to the AI. The results are better than describing the content, IMHO.