Maybe see if it can make some maps using the SierraLucas dataset you were using?

Stuff like an island map, a theme park map, a map with a town, forest, mountains, etc.

I’ve used these 40 pictures from Laura Bow 2, KQ5 and KQ6: https://drive.google.com/drive/folders/1lfxMM5jzQgGV23Rb2K5IG6XvtSRwUQEo?usp=sharing

I could only pick 40, so I chose those ones.

That’s probably because I enabled a feature of leonardo.ai called “prompt magic”, which lets it understand things that are not in the training pictures. This way it seems to draw on a more general knowledge base, but the downside is that the style of the result drifts further away from the original images.

PS for everyone, this was useful: if you want to make images square by adding black borders, you can install ImageMagick and run this from the Windows command prompt (in a .bat file, double every `%` sign):

```bat
for %I in (*.jpg *.png) do magick "%I" -background black -gravity center -extent "%[fx:max(w,h)]x%[fx:max(w,h)]" "output_%I"
```

To train the AI, you need square pictures.
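The geometry behind the ImageMagick command above (a square canvas with side max(w, h), with the picture centered on it) can be sketched in plain Python. This is a hypothetical illustration working on a 2D list of pixel values, with 0 standing in for black, not a replacement for ImageMagick on real files; the function names are made up, and ImageMagick may round odd padding differently by one pixel.

```python
def square_canvas(width: int, height: int) -> tuple[int, int, int]:
    """Return (side, x_offset, y_offset) for centering a width x height
    image on a square canvas whose side is max(width, height)."""
    side = max(width, height)
    # Split the padding evenly; with an odd remainder the extra pixel
    # ends up on the right/bottom (ImageMagick may differ by one pixel).
    return side, (side - width) // 2, (side - height) // 2


def pad_to_square(pixels: list[list[int]], fill: int = 0) -> list[list[int]]:
    """Center a rectangular 2D grid of pixel values on a square canvas
    filled with `fill` (0 standing in for black)."""
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    side, x_off, y_off = square_canvas(width, height)
    canvas = [[fill] * side for _ in range(side)]
    for y, row in enumerate(pixels):
        # Copy each source row into the middle of the matching canvas row.
        canvas[y + y_off][x_off:x_off + width] = row
    return canvas


print(square_canvas(640, 400))   # (640, 0, 120)
print(pad_to_square([[1, 2, 3]]))  # [[0, 0, 0], [1, 2, 3], [0, 0, 0]]
```

For example, a 640×400 background gets a 640×640 canvas with 120-pixel black bars above and below.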

Maybe it can do maps using the backgrounds dataset from before?

If it knows what a map is, maybe it would just use the painting style of the backgrounds to draw the maps.

If that doesn’t work, then yeah, we’ll have to collect maps.

I tried with the same dataset:

with prompt magic:

without prompt magic:

more complex query, with prompt magic:

same complex query, without prompt magic (this shows the training set is not enough):

I think we can’t avoid some manual compositing in Photoshop if we want to make something that can actually be shown.

I like some of the prompt magic ones. I think this one is very usable:

Actually I like this one, but it’s a bit empty. I would just draw the relevant stuff on top of it…

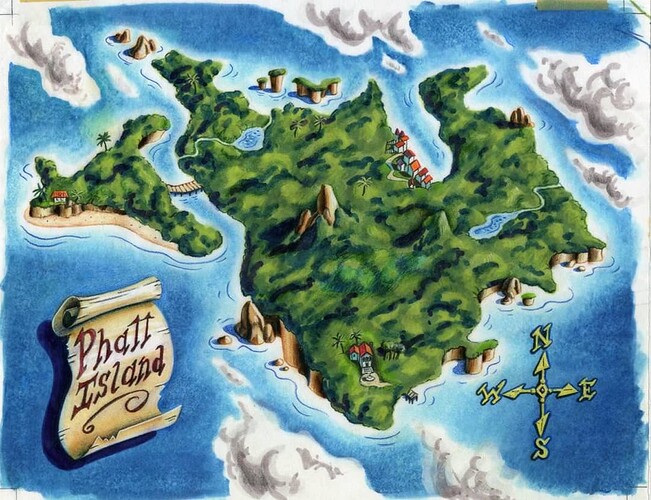

Anyway, I’ll try training it on the actual pixel-art maps from Monkey Island 1 and 2 and see what happens, and then on the special editions to see what changes.

Now I would really like to know what “Tarolto” is…

Anyway, fascinating results! They are indeed usable. Even the last two in this (your) post look like maps from a strategy game. I really wonder what training data the results are based on.

I’m very curious whether the AI (re-)uses the shapes of these islands in some way.

You would think changing the look of the 9 verbs in AGS would be easy. It is not.

It’s not crazily difficult, but things like that would definitely create a bottleneck in an otherwise streamlined AI-assisted game production. And I haven’t even begun to try to get Guybrush into the game.

It might all be a bit easier with Adventure Creator, though that costs money (€79.84). Apparently it goes on sale quite often, though.

If I could, I’d gift you my copy of AC, because I don’t use it and I don’t think I ever will again.

GUIs in AGS are a bit obnoxious. I made my own for my games, but then I moved away from AGS. If you want an alternative, you can try PowerQuest, which is basically AGS inside Unity.

(I don’t understand why this doesn’t give as good results as the rest)

Downscaled by AI (using the AUTOMATIC1111/stable-diffusion-webui-pixelization plugin), with pixel size = 5, which results in a width of 307 pixels:

Downscaled with Krita to a width of 307 pixels, nearest neighbor:
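For comparison, nearest-neighbor downscaling just copies one source pixel into each target pixel, with no blending, which is why it keeps hard pixel edges. Below is a hypothetical pure-Python sketch on a 2D list of pixel values; sampling at each target pixel’s center is an assumption, and Krita’s exact sampling convention may differ.

```python
def nearest_neighbor_resize(pixels: list[list[int]], new_width: int) -> list[list[int]]:
    """Downscale a rectangular 2D grid of pixel values to `new_width` columns,
    keeping the aspect ratio, by copying the source pixel under each
    target pixel's center (no averaging)."""
    if not pixels or not pixels[0]:
        return []
    src_h, src_w = len(pixels), len(pixels[0])
    # Preserve the aspect ratio; round() picks the nearest whole row count.
    new_height = max(1, round(src_h * new_width / src_w))
    out = []
    for y in range(new_height):
        # Map the center of target row y back into source coordinates.
        sy = min(src_h - 1, int((y + 0.5) * src_h / new_height))
        src_row = pixels[sy]
        out.append([
            # Same center mapping for each column.
            src_row[min(src_w - 1, int((x + 0.5) * src_w / new_width))]
            for x in range(new_width)
        ])
    return out


# A 2x3 "pixel art" grid blown up 5x, then shrunk back to 2 columns.
art = [[1, 2], [3, 4], [5, 6]]
big = [[art[y // 5][x // 5] for x in range(10)] for y in range(15)]
print(nearest_neighbor_resize(big, 2))  # [[1, 2], [3, 4], [5, 6]]
```

If the source were an exact 5× upscale of a pixel grid, this would recover the original grid exactly; AI-generated images are generally not aligned to such a grid, so the result depends on where the samples happen to land.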

Thanks for the tip!

I had seen that name floating about and didn’t know what it was. I think I even saw an MI2 template for it, so I’ll definitely look into that.